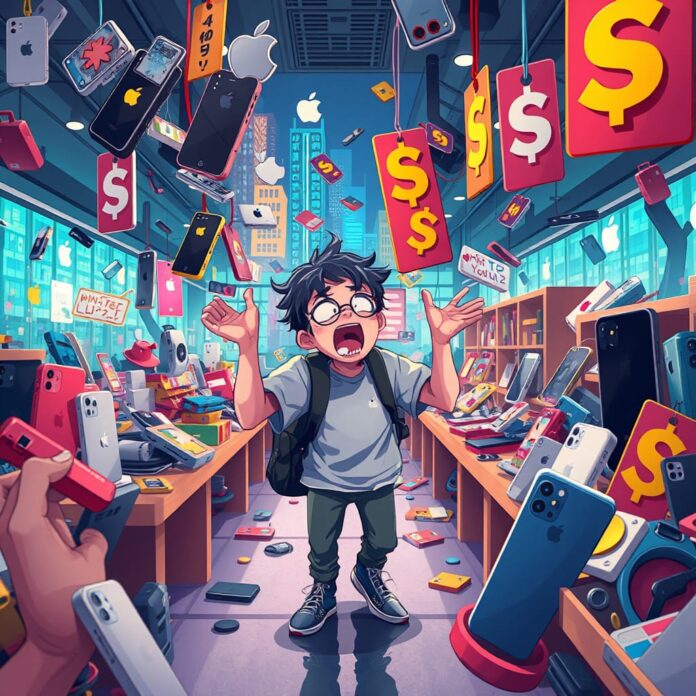

In what self-appointed tech historians are already calling the most predictable conflict since the invention of comment sections, the long-simmering tensions between Android users and iPhone devotees have erupted into full-scale digital warfare.

It was the age of infinite customization, it was the age of walled gardens; it was the epoch of open source rebellion, it was the epoch of premium subscription everything. In the sprawling digital landscape of 2025, two great tribes had emerged from the primordial soup of smartphone adoption, each convinced of their moral and technological superiority, each utterly baffled by the other’s existence.

On one side stood the Android Peasants—a scrappy confederation of budget-conscious rebels, tech tinkerers, and anyone who had ever uttered the phrase “but you can sideload apps.” On the other, the Apple Sheep grazed contentedly in their pristine ecosystem, their AirPods gleaming like tiny white flags of surrender to corporate benevolence, their loyalty as unshakeable as their monthly subscription payments.

The war began, as all great conflicts do, with a simple software update.

The Spark That Lit the Digital Powder Keg

The incident that would later be known as “Notification Gate” occurred on a Wednesday morning when Apple released iOS 18.7, featuring what the company described as “revolutionary message prioritization technology.” The update automatically sorted text messages by the sender’s device type, placing Android messages in a separate folder labeled “External Communications”—complete with a small green warning triangle that users swore looked suspiciously like a biohazard symbol.

Marcus Rootaccess, a prominent Android Peasant leader and moderator of seventeen different custom ROM forums, issued what would become known as the “Fragmentation Manifesto” within hours of the update’s release. “For too long,” he declared from his basement command center, surrounded by seven different Android devices running various stages of beta software, “we have tolerated the condescending smirks of the Apple Aristocracy. No more shall we endure their pitying glances when our messages appear in green bubbles of shame.”

The manifesto, which quickly went viral across Reddit, XDA Developers, and a surprisingly active Telegram channel called “Death to Proprietary Cables,” outlined a comprehensive strategy for what Rootaccess termed “digital class warfare.” The document detailed everything from coordinated review bombing of Apple apps to a sophisticated campaign of sending iPhone users increasingly complex Android customization screenshots designed to induce what psychologists were calling “choice paralysis anxiety disorder.”

The Apple Counter-Offensive

The response from the Apple Sheep was swift and devastating in its passive-aggressive precision. Led by Serenity Unboxwell, a lifestyle influencer whose Instagram bio simply read “Curated. Seamless. Superior.” and whose followers numbered in the millions, the Apple faithful launched what they called “Operation Aesthetic Intervention.”

The campaign was elegant in its simplicity: Apple users would respond to every Android customization post with a single, perfectly composed photograph of their iPhone’s home screen—unchanged from factory settings except for a carefully curated selection of premium apps, each icon a small monument to tasteful restraint and financial privilege.

“We don’t need to customize,” Unboxwell explained during a livestream from her minimalist studio apartment, where every surface was white and every device was Apple. “Perfection doesn’t require modification. That’s what separates the evolved from the… well, from those who think more options somehow equals better experience.”

The psychological warfare escalated quickly. Android Peasants began sharing screenshots of their battery usage statistics, highlighting their ability to replace batteries and use phones for more than two years without performance degradation. Apple Sheep countered with time-lapse videos of their seamless device synchronization across MacBooks, iPads, Apple Watches, and AirPods, each transition so smooth it bordered on the supernatural.

The Battle for the Moral High Ground

As the conflict intensified, both sides began claiming ethical superiority. The Android Peasants positioned themselves as digital freedom fighters, champions of open-source values and consumer choice. They created elaborate infographics showing the environmental impact of planned obsolescence, the economic benefits of device longevity, and the philosophical importance of user agency in the digital age.

“We’re not just fighting for our right to install custom keyboards,” declared Dr. Sideload McOpenSource, a computer science professor who had legally changed his name after a particularly intense debate about app store policies. “We’re fighting for the very soul of computing. Every locked bootloader is a small death of human potential. Every proprietary connector is a chain around the ankle of progress.”

The Apple Sheep, meanwhile, positioned their loyalty as a form of sophisticated consumer consciousness. They argued that their willingness to pay premium prices represented a mature understanding of value, quality, and the hidden costs of “free” alternatives. Their thought leaders spoke eloquently about the mental health benefits of reduced choice, the productivity gains of seamless integration, and the social responsibility of supporting companies that prioritized user privacy over advertising revenue.

“Simplicity is the ultimate sophistication,” proclaimed Harmony Ecosystem, Apple’s newly appointed Chief Philosophy Officer, during a keynote presentation that was simultaneously broadcast across all Apple devices worldwide. “While others fragment their attention across endless customization options, we focus on what truly matters: the elegant execution of essential functions, delivered through hardware and software designed in perfect harmony.”

The Escalation: Operation Green Bubble

The conflict reached a new level of intensity when the Android Peasants launched “Operation Green Bubble,” a coordinated effort to flood iMessage group chats with high-resolution images, large file attachments, and video messages that would automatically downgrade the entire conversation to SMS, turning everyone’s messages green and disabling read receipts.

The psychological impact was immediate and devastating. Apple Sheep across the globe reported symptoms ranging from mild anxiety to full-scale existential crisis as their carefully curated blue-bubble social circles dissolved into the chaos of cross-platform messaging. Support groups formed on Reddit, with names like “Survivors of Green Bubble Trauma” and “Healing from Mixed-Platform Group Chats.”

The Apple response was characteristically elegant and ruthlessly effective. They released a software update that would automatically detect when an Android user was added to a group chat and display a notification: “A non-optimized device has joined this conversation. Experience may (and definitely will) be degraded. Would you like to suggest alternative communication platforms to ensure optimal user experience for all participants?”

The Economics of Digital Tribalism

As the war raged on, economists began studying what they termed the “Platform Loyalty Paradox”—the phenomenon whereby consumers would make increasingly irrational purchasing decisions to maintain tribal allegiance. Android Peasants were observed buying flagship devices that cost more than iPhones, simply to avoid being associated with Apple’s “premium pricing strategy.” Apple Sheep, meanwhile, were purchasing multiple devices they didn’t need, including $400 wheels for their Mac Pro computers, as a form of loyalty signaling.

Market researchers identified a new consumer category: “Platform Agnostics,” individuals who used both Android and iOS devices depending on their specific needs. These digital Switzerland citizens were universally despised by both tribes, viewed as traitors lacking the moral conviction to choose a side in the great philosophical battle of our time.

The Unintended Consequences

The war had effects far beyond the mobile phone market. Dating apps reported a 300% increase in profile filters based on device preference. Real estate listings began including “iOS-optimized smart home systems” as selling points. Restaurants started offering separate sections for Android and iPhone users, claiming it reduced dining room tension and improved overall customer satisfaction.

Perhaps most surprisingly, the conflict spawned an entire industry of “Digital Diplomacy” consultants—professionals trained to facilitate communication between mixed-platform households and workplaces. These specialists, commanding fees of up to $500 per hour, would mediate disputes over everything from family photo sharing protocols to collaborative document editing platforms.

The Philosophy of Technological Tribalism

As the war entered its second year, academic institutions began offering courses in “Platform Psychology” and “Digital Anthropology.” Researchers identified the conflict as a manifestation of deeper human needs for identity, belonging, and meaning in an increasingly complex technological landscape.

Dr. Binary Choicefield, a leading expert in consumer technology psychology, published a groundbreaking study suggesting that smartphone preference had become a more reliable predictor of political affiliation, dietary choices, and relationship compatibility than traditional demographic markers. “We’re not just choosing phones,” she explained. “We’re choosing identities, value systems, and entire worldviews. The Android versus iPhone debate is really a proxy war for fundamental questions about freedom versus security, complexity versus simplicity, and individual agency versus collective harmony.”

The study’s most controversial finding was that both tribes exhibited identical psychological patterns: confirmation bias, in-group favoritism, and what researchers termed “technological Stockholm syndrome”—the tendency to defend corporate decisions that directly contradicted users’ stated preferences and interests.

The Future of the Mobile Cold War

As this report goes to press, both sides are preparing for what military analysts are calling “The Great Convergence”—a predicted future state where Android and iOS become functionally identical, leaving their respective tribes fighting over increasingly meaningless distinctions. Intelligence sources suggest that both Google and Apple are secretly developing “Platform Neutrality Protocols” designed to gradually reduce the differences between their operating systems, potentially ending the war through technological détente rather than decisive victory.

However, tribal leaders on both sides have vowed to find new battlegrounds. Early skirmishes have already begun over foldable phone designs, AI assistant personalities, and the philosophical implications of different approaches to augmented reality interfaces. Some experts predict that the Android Peasants and Apple Sheep will eventually unite against a common enemy: the emerging tribe of “Linux Phone Purists,” whose numbers remain small but whose ideological purity is considered a threat to the established order of consumer technology tribalism.

The war continues, fought in comment sections and group chats, in family dinners and corporate boardrooms, in the hearts and minds of consumers who just wanted a device to make phone calls and somehow found themselves enlisted in the most passionate, pointless, and perfectly human conflict of the digital age.

Which side of the great mobile divide do you find yourself on? Are you a proud Android Peasant fighting for digital freedom, a sophisticated Apple Sheep enjoying curated excellence, or one of those diplomatically dangerous Platform Agnostics? Share your war stories, conversion experiences, or peace proposals in the comments—just remember to specify which device you’re using to type your response.

Support the Resistance (Against All Platforms)

If this exposé of the great mobile war made you laugh, cry, or suddenly question whether your phone choice defines your entire personality, consider supporting TechOnion with a donation of any amount. Unlike the warring tech giants we chronicle, we promise to remain platform-agnostic in our satirical coverage—we mock Android and iOS with equal opportunity disdain. Your contribution helps us continue documenting the absurdities of digital tribalism, one unnecessarily passionate comment thread at a time. Because in a world divided by green and blue bubbles, someone needs to stay in the purple zone of satirical neutrality.